FFmpeg

|

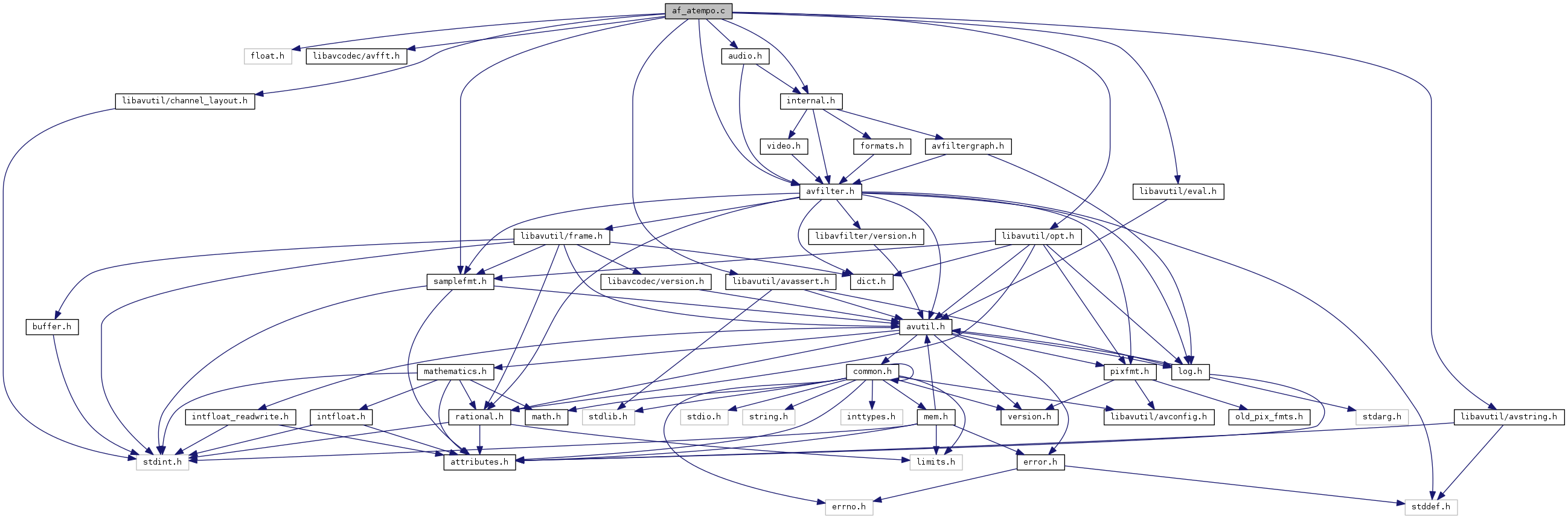

Tempo scaling audio filter: an implementation of the WSOLA algorithm.

#include <float.h>

#include "libavcodec/avfft.h"

#include "libavutil/avassert.h"

#include "libavutil/avstring.h"

#include "libavutil/channel_layout.h"

#include "libavutil/eval.h"

#include "libavutil/opt.h"

#include "libavutil/samplefmt.h"

#include "avfilter.h"

#include "audio.h"

#include "internal.h"

Data Structures

| Type | Name | Description |
|---|---|---|
| struct | AudioFragment | A fragment of audio waveform. |
| struct | ATempoContext | Filter state machine. |

Macros

| Macro | Description |
|---|---|
| #define OFFSET(x) offsetof(ATempoContext, x) | |
| #define RE_MALLOC_OR_FAIL(field, field_size) | |
| #define yae_init_xdat(scalar_type, scalar_max) | A helper macro for initializing complex data buffer with scalar data of a given type. |
| #define yae_blend(scalar_type) | A helper macro for blending the overlap region of previous and current audio fragment. |

Enumerations

| Type | Name and values | Description |
|---|---|---|
| enum | FilterState { YAE_LOAD_FRAGMENT, YAE_ADJUST_POSITION, YAE_RELOAD_FRAGMENT, YAE_OUTPUT_OVERLAP_ADD, YAE_FLUSH_OUTPUT } | Filter state machine states. |

Functions

| Return type | Function | Description |
|---|---|---|
| | AVFILTER_DEFINE_CLASS (atempo) | |
| static void | yae_clear (ATempoContext *atempo) | Reset filter to initial state, do not deallocate existing local buffers. |
| static void | yae_release_buffers (ATempoContext *atempo) | Reset filter to initial state and deallocate all buffers. |
| static int | yae_reset (ATempoContext *atempo, enum AVSampleFormat format, int sample_rate, int channels) | Prepare filter for processing audio data of given format, sample rate and number of channels. |
| static int | yae_set_tempo (AVFilterContext *ctx, const char *arg_tempo) | |
| static AudioFragment * | yae_curr_frag (ATempoContext *atempo) | |
| static AudioFragment * | yae_prev_frag (ATempoContext *atempo) | |
| static void | yae_downmix (ATempoContext *atempo, AudioFragment *frag) | Initialize complex data buffer of a given audio fragment with down-mixed mono data of appropriate scalar type. |
| static int | yae_load_data (ATempoContext *atempo, const uint8_t **src_ref, const uint8_t *src_end, int64_t stop_here) | Populate the internal data buffer on as-needed basis. |
| static int | yae_load_frag (ATempoContext *atempo, const uint8_t **src_ref, const uint8_t *src_end) | Populate current audio fragment data buffer. |
| static void | yae_advance_to_next_frag (ATempoContext *atempo) | Prepare for loading next audio fragment. |
| static void | yae_xcorr_via_rdft (FFTSample *xcorr, RDFTContext *complex_to_real, const FFTComplex *xa, const FFTComplex *xb, const int window) | Calculate cross-correlation via rDFT. |
| static int | yae_align (AudioFragment *frag, const AudioFragment *prev, const int window, const int delta_max, const int drift, FFTSample *correlation, RDFTContext *complex_to_real) | Calculate alignment offset for given fragment relative to the previous fragment. |
| static int | yae_adjust_position (ATempoContext *atempo) | Adjust current fragment position for better alignment with previous fragment. |
| static int | yae_overlap_add (ATempoContext *atempo, uint8_t **dst_ref, uint8_t *dst_end) | Blend the overlap region of previous and current audio fragment and output the results to the given destination buffer. |
| static void | yae_apply (ATempoContext *atempo, const uint8_t **src_ref, const uint8_t *src_end, uint8_t **dst_ref, uint8_t *dst_end) | Feed as much data to the filter as it is able to consume and receive as much processed data in the destination buffer as it is able to produce or store. |
| static int | yae_flush (ATempoContext *atempo, uint8_t **dst_ref, uint8_t *dst_end) | Flush any buffered data from the filter. |
| static av_cold int | init (AVFilterContext *ctx) | |
| static av_cold void | uninit (AVFilterContext *ctx) | |
| static int | query_formats (AVFilterContext *ctx) | |
| static int | config_props (AVFilterLink *inlink) | |
| static int | push_samples (ATempoContext *atempo, AVFilterLink *outlink, int n_out) | |
| static int | filter_frame (AVFilterLink *inlink, AVFrame *src_buffer) | |
| static int | request_frame (AVFilterLink *outlink) | |
| static int | process_command (AVFilterContext *ctx, const char *cmd, const char *arg, char *res, int res_len, int flags) | |

Variables

| Type | Name |
|---|---|
| static const AVOption | atempo_options [] |
| static const AVFilterPad | atempo_inputs [] |
| static const AVFilterPad | atempo_outputs [] |
| AVFilter | avfilter_af_atempo |

Detailed Description

Tempo scaling audio filter: an implementation of the WSOLA algorithm.

Based on the MIT-licensed yaeAudioTempoFilter.h and yaeAudioFragment.h from the Apprentice Video player by Pavel Koshevoy. https://sourceforge.net/projects/apprenticevideo/

An explanation of the SOLA algorithm is available at http://www.surina.net/article/time-and-pitch-scaling.html

WSOLA is very similar to SOLA; the one major difference is that SOLA shifts audio fragments along the output stream, whereas WSOLA shifts audio fragments along the input stream.

The advantage of the WSOLA algorithm is that the overlap region size is always the same, so the blending function is constant and can be precomputed.

Definition in file af_atempo.c.

Macro Definition Documentation

#define OFFSET(x) offsetof(ATempoContext, x)

Definition at line 151 of file af_atempo.c.

#define RE_MALLOC_OR_FAIL(field, field_size)

Definition at line 225 of file af_atempo.c.

Referenced by yae_reset().

#define yae_blend(scalar_type)

A helper macro for blending the overlap region of previous and current audio fragment.

Definition at line 719 of file af_atempo.c.

Referenced by yae_overlap_add().

#define yae_init_xdat(scalar_type, scalar_max)

A helper macro for initializing complex data buffer with scalar data of a given type.

Definition at line 344 of file af_atempo.c.

Referenced by yae_downmix().

Enumeration Type Documentation

enum FilterState

Filter state machine states.

| Enumerator | |
|---|---|
| YAE_LOAD_FRAGMENT | |
| YAE_ADJUST_POSITION | |
| YAE_RELOAD_FRAGMENT | |
| YAE_OUTPUT_OVERLAP_ADD | |
| YAE_FLUSH_OUTPUT | |

Definition at line 76 of file af_atempo.c.
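The state names suggest a load, align, reload, output cycle. The sketch below shows one plausible reading of how such a state machine could step; the transition rules, the function sketch_next_state and its two flag parameters are assumptions made for illustration and are not taken from af_atempo.c.

```c
/* Copy of the enumeration documented above, so the sketch is self-contained. */
enum FilterState {
    YAE_LOAD_FRAGMENT,
    YAE_ADJUST_POSITION,
    YAE_RELOAD_FRAGMENT,
    YAE_OUTPUT_OVERLAP_ADD,
    YAE_FLUSH_OUTPUT
};

/*
 * Hypothetical transition function (assumed, not the filter's code).
 * have_prev_fragment: non-zero once a previous fragment exists to align against.
 * position_changed:   non-zero if alignment moved the current fragment, so its
 *                     data must be loaded again from the new position.
 */
static enum FilterState sketch_next_state(enum FilterState state,
                                          int have_prev_fragment,
                                          int position_changed)
{
    switch (state) {
    case YAE_LOAD_FRAGMENT:
        /* the very first fragment has nothing to align with: load another */
        return have_prev_fragment ? YAE_ADJUST_POSITION : YAE_LOAD_FRAGMENT;
    case YAE_ADJUST_POSITION:
        return position_changed ? YAE_RELOAD_FRAGMENT : YAE_OUTPUT_OVERLAP_ADD;
    case YAE_RELOAD_FRAGMENT:
        return YAE_OUTPUT_OVERLAP_ADD;
    case YAE_OUTPUT_OVERLAP_ADD:
        /* overlap region written: move on to the next fragment */
        return YAE_LOAD_FRAGMENT;
    case YAE_FLUSH_OUTPUT:
    default:
        /* assumed terminal: stays here until the filter is reset */
        return YAE_FLUSH_OUTPUT;
    }
}
```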

Function Documentation

AVFILTER_DEFINE_CLASS (atempo)

static int config_props (AVFilterLink *inlink)

Definition at line 1018 of file af_atempo.c.

static int filter_frame (AVFilterLink *inlink, AVFrame *src_buffer)

Definition at line 1058 of file af_atempo.c.

static av_cold int init (AVFilterContext *ctx)

Definition at line 963 of file af_atempo.c.

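static int process_command (AVFilterContext *ctx, const char *cmd, const char *arg, char *res, int res_len, int flags)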

Definition at line 1141 of file af_atempo.c.

static int push_samples (ATempoContext *atempo, AVFilterLink *outlink, int n_out)

Definition at line 1032 of file af_atempo.c.

Referenced by filter_frame(), and request_frame().

static int query_formats (AVFilterContext *ctx)

Definition at line 977 of file af_atempo.c.

static int request_frame (AVFilterLink *outlink)

Definition at line 1097 of file af_atempo.c.

static av_cold void uninit (AVFilterContext *ctx)

Definition at line 971 of file af_atempo.c.

static int yae_adjust_position (ATempoContext *atempo)

Adjust current fragment position for better alignment with previous fragment.

Returns: alignment correction.

Definition at line 687 of file af_atempo.c.

Referenced by yae_apply(), and yae_flush().

static void yae_advance_to_next_frag (ATempoContext *atempo)

Prepare for loading next audio fragment.

Definition at line 577 of file af_atempo.c.

Referenced by yae_apply().

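static int yae_align (AudioFragment *frag, const AudioFragment *prev, const int window, const int delta_max, const int drift, FFTSample *correlation, RDFTContext *complex_to_real)

Calculate alignment offset for given fragment relative to the previous fragment.

Returns: alignment offset of current fragment relative to previous.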

Definition at line 633 of file af_atempo.c.

Referenced by yae_adjust_position().
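An alignment offset of this kind is typically the position of the cross-correlation peak inside a bounded search range. The sketch below is a simplified illustration under stated assumptions: the helper sketch_best_offset is hypothetical, zero lag is assumed to sit at index window / 2, and the drift parameter and any metric weighting used by yae_align are left out.

```c
#include <float.h>

/*
 * Hypothetical helper: return the offset (relative to window / 2) of the
 * largest correlation value within +/- delta_max of the centre.
 * xcorr must hold at least `window` values.
 */
static int sketch_best_offset(const float *xcorr, int window, int delta_max)
{
    const int center = window / 2;
    int i0 = center - delta_max;   /* first index searched */
    int i1 = center + delta_max;   /* one past the last index searched */
    int best_offset = 0;
    float best_metric = -FLT_MAX;

    if (i0 < 0)
        i0 = 0;
    if (i1 > window)
        i1 = window;

    for (int i = i0; i < i1; i++) {
        if (xcorr[i] > best_metric) {
            best_metric = xcorr[i];
            best_offset = i - center;
        }
    }
    return best_offset;
}
```

Limiting the search to delta_max keeps a fragment from jumping to a distant but coincidentally similar part of the waveform.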

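static void yae_apply (ATempoContext *atempo, const uint8_t **src_ref, const uint8_t *src_end, uint8_t **dst_ref, uint8_t *dst_end)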

Feed as much data to the filter as it is able to consume and receive as much processed data in the destination buffer as it is able to produce or store.

Definition at line 811 of file af_atempo.c.

Referenced by filter_frame().

static void yae_clear (ATempoContext *atempo)

Reset filter to initial state, do not deallocate existing local buffers.

Definition at line 165 of file af_atempo.c.

Referenced by yae_release_buffers(), and yae_reset().

static inline AudioFragment * yae_curr_frag (ATempoContext *atempo)

Definition at line 330 of file af_atempo.c.

Referenced by yae_adjust_position(), yae_advance_to_next_frag(), yae_apply(), yae_flush(), yae_load_frag(), and yae_overlap_add().

static void yae_downmix (ATempoContext *atempo, AudioFragment *frag)

Initialize complex data buffer of a given audio fragment with down-mixed mono data of appropriate scalar type.

Definition at line 394 of file af_atempo.c.

Referenced by yae_apply(), and yae_flush().
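Down-mixing reduces each multi-channel frame to a single value stored in the real part of a complex sample, ready for the transform. The sketch below assumes float samples and assumes the reduction keeps, per frame, the channel sample with the largest magnitude; this page does not state the rule that yae_init_xdat applies, and SketchComplex and sketch_downmix are hypothetical names.

```c
#include <math.h>
#include <stddef.h>

/* Hypothetical stand-in for a complex sample type. */
typedef struct {
    float re;
    float im;
} SketchComplex;

/*
 * Hypothetical helper: reduce interleaved float frames to mono.
 * For every frame, keep the channel sample with the largest absolute value
 * (an assumed rule) and store it as a purely real complex number.
 */
static void sketch_downmix(const float *src, size_t nframes, int channels,
                           SketchComplex *xdat)
{
    for (size_t f = 0; f < nframes; f++) {
        const float *frame = src + f * (size_t)channels;
        float pick = frame[0];

        for (int c = 1; c < channels; c++) {
            if (fabsf(frame[c]) > fabsf(pick))
                pick = frame[c];
        }
        xdat[f].re = pick;
        xdat[f].im = 0.0f;
    }
}
```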

static int yae_flush (ATempoContext *atempo, uint8_t **dst_ref, uint8_t *dst_end)

Flush any buffered data from the filter.

Returns: 0 if all data was completely stored in the dst buffer, AVERROR(EAGAIN) if more destination buffer space is required.

Definition at line 885 of file af_atempo.c.

Referenced by request_frame().
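The 0 / AVERROR(EAGAIN) convention described above implies a caller that keeps offering fresh destination space until the producer reports completion. The sketch below imitates that calling pattern with stand-ins only: SKETCH_AVERROR, SketchProducer, sketch_flush and sketch_drain are hypothetical and merely mimic the dst_ref / dst_end signature; they are not FFmpeg APIs.

```c
#include <errno.h>
#include <stddef.h>
#include <string.h>

/* Stand-in for AVERROR(); assumes errno codes are positive, as on POSIX. */
#define SKETCH_AVERROR(e) (-(e))

/* Hypothetical producer holding data that still has to be delivered. */
typedef struct {
    const unsigned char *pending;
    size_t remaining;
} SketchProducer;

/*
 * Write as much pending data as fits into [*dst_ref, dst_end) and advance
 * *dst_ref past it. Returns 0 when everything has been delivered, or
 * SKETCH_AVERROR(EAGAIN) when more destination space is required.
 */
static int sketch_flush(SketchProducer *p, unsigned char **dst_ref,
                        unsigned char *dst_end)
{
    size_t room = (size_t)(dst_end - *dst_ref);
    size_t n = p->remaining < room ? p->remaining : room;

    memcpy(*dst_ref, p->pending, n);
    p->pending += n;
    p->remaining -= n;
    *dst_ref += n;
    return p->remaining ? SKETCH_AVERROR(EAGAIN) : 0;
}

/* Drain the producer through a small scratch buffer; returns bytes collected. */
static size_t sketch_drain(SketchProducer *p, unsigned char *sink, size_t sink_size)
{
    unsigned char scratch[4];
    size_t total = 0;
    int err;

    do {
        unsigned char *dst = scratch;
        err = sketch_flush(p, &dst, scratch + sizeof(scratch));
        size_t got = (size_t)(dst - scratch);
        if (total + got > sink_size)
            break;              /* the sink is full: stop early */
        memcpy(sink + total, scratch, got);
        total += got;
    } while (err == SKETCH_AVERROR(EAGAIN));

    return total;
}
```

Passing the destination as a pointer-to-pointer lets the producer report how much it wrote simply by advancing the caller's cursor.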

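static int yae_load_data (ATempoContext *atempo, const uint8_t **src_ref, const uint8_t *src_end, int64_t stop_here)

Populate the internal data buffer on as-needed basis.

Returns: 0 if requested data was already available or was successfully loaded, AVERROR(EAGAIN) if more input data is required.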

Definition at line 422 of file af_atempo.c.

Referenced by yae_load_frag().

static int yae_load_frag (ATempoContext *atempo, const uint8_t **src_ref, const uint8_t *src_end)

Populate current audio fragment data buffer.

Returns: 0 when the fragment is ready, AVERROR(EAGAIN) if more input data is required.

Definition at line 497 of file af_atempo.c.

Referenced by yae_apply(), and yae_flush().

static int yae_overlap_add (ATempoContext *atempo, uint8_t **dst_ref, uint8_t *dst_end)

Blend the overlap region of previous and current audio fragment and output the results to the given destination buffer.

Returns: 0 if the overlap region was completely stored in the dst buffer, AVERROR(EAGAIN) if more destination buffer space is required.

Definition at line 756 of file af_atempo.c.

Referenced by yae_apply(), and yae_flush().

static inline AudioFragment * yae_prev_frag (ATempoContext *atempo)

Definition at line 335 of file af_atempo.c.

Referenced by yae_adjust_position(), yae_advance_to_next_frag(), and yae_overlap_add().

static void yae_release_buffers (ATempoContext *atempo)

Reset filter to initial state and deallocate all buffers.

Definition at line 203 of file af_atempo.c.

Referenced by uninit(), and yae_reset().

static int yae_reset (ATempoContext *atempo, enum AVSampleFormat format, int sample_rate, int channels)

Prepare filter for processing audio data of given format, sample rate and number of channels.

Definition at line 239 of file af_atempo.c.

Referenced by config_props().

static int yae_set_tempo (AVFilterContext *ctx, const char *arg_tempo)

Definition at line 309 of file af_atempo.c.

Referenced by process_command().

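static void yae_xcorr_via_rdft (FFTSample *xcorr, RDFTContext *complex_to_real, const FFTComplex *xa, const FFTComplex *xb, const int window)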

Calculate cross-correlation via rDFT.

Multiply two vectors of complex numbers (result of real_to_complex rDFT) and transform back via complex_to_real rDFT.

Definition at line 599 of file af_atempo.c.

Referenced by yae_align().

Variable Documentation

static const AVFilterPad atempo_inputs[]

Definition at line 1151 of file af_atempo.c.

static const AVOption atempo_options[]

Definition at line 153 of file af_atempo.c.

static const AVFilterPad atempo_outputs[]

Definition at line 1161 of file af_atempo.c.

AVFilter avfilter_af_atempo

Definition at line 1170 of file af_atempo.c.

Generated by Doxygen 1.8.11